We’ve been having issues serving build log updates on time on Travis CI. First up, we apologize for this, especially given that it’s not the first time it has happened. We had a similar issue last Thursday, when our log updates queue got clogged up with 25000 messages and caught up only very slowly.

Yesterday we had a similar issue, only this time the queue stalled until more than 100000 messages were stuck and not being processed. As a result, most build logs from yesterday remained empty.

In general, we’ve had multiple occasions where log processing just couldn’t keep up with the number of incoming messages.

What happened?

In an effort to improve log processing in general, yesterday we put a new component in place that was supposed to speed things up by parallelizing the log updates. Unfortunately, things didn’t go as expected and the queues started to fill up.

After investigating our metrics, we found that parallelization wasn’t actually in effect. Once we fixed that, log processing finally caught up: the 100000-ish queued messages were processed in less than an hour.

Here’s a graph outlining what happened in the log processing and how things evolved when we finally found and fixed the issue:

For most of the day, processing was stuck at around 700 messages per minute. Given that we receive a few hundred more messages than that every minute, a backed-up queue was all but inevitable. At around 18:25, we deployed a fix that made sure parallelization worked properly, and at around 18:40, we ramped up parallel processing even more, so that we ended up processing 4000 messages per minute at peak times.
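The arithmetic behind the backlog is simple: while processing is slower than the incoming rate, the queue grows by the difference every minute, and once processing is faster, it drains at the difference. A rough sketch, assuming an incoming rate of about 1000 messages per minute (our peak figure) and treating the 4000 per minute peak as if it were sustained, which it wasn’t, so real drain times are longer:

```python
def backlog_growth_per_minute(processed_per_min, incoming_per_min):
    """Net change in queue length per minute (positive means the queue grows)."""
    return incoming_per_min - processed_per_min


def minutes_to_drain(backlog, processed_per_min, incoming_per_min):
    """Minutes until the queue is empty, assuming both rates stay constant."""
    net = processed_per_min - incoming_per_min
    if net <= 0:
        raise ValueError("queue never drains: processing is not faster than arrivals")
    return backlog / net


INCOMING = 1000  # assumed arrival rate in messages per minute

print(backlog_growth_per_minute(700, INCOMING))          # 300
print(round(minutes_to_drain(100_000, 4000, INCOMING)))  # 33
```

At 700 messages per minute the queue grows by roughly 300 messages every minute; at the peak rate, a 100000-message backlog would clear in about half an hour, which fits with it being gone in under an hour.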

Technicalities

After last week’s incidents, we decided to take measures to improve log processing performance in general. Until last week, our hub component processed all log updates, using just one thread per build platform (PHP, Ruby, Erlang/Java/Node.js, Rails).

At peak times we get about 1000 log updates per minute, which doesn’t look like a lot, but it’s quite a bit for a single thread that does lots of database updates.
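To put 1000 updates per minute into perspective: a single thread has, on average, 60 ms per update (database round trips included) before it starts falling behind. A tiny sketch of that arithmetic, assuming updates are spread evenly over threads, which is an idealization:

```python
def ms_budget_per_update(updates_per_min, threads=1):
    """Average time each update may take before the workers fall behind.

    Assumes the updates are spread evenly across the threads.
    """
    return 60_000 / updates_per_min * threads


print(ms_budget_per_update(1000))     # 60.0
print(ms_budget_per_update(1000, 9))  # 540.0
```

With nine threads, the budget per update grows ninefold, but only if the load is actually spread out; a single chatty job still has to fit into one thread’s budget.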

As a first step, we moved log processing into a separate application, where multiple threads work through the log messages as they come in, speeding up processing. Jobs are partitioned by their key, so all messages for a given job are handled by the same thread and log ordering stays consistent. It’s still not perfect, because a) it’s not distributed yet and b) jobs with a lot of log output can still clog up a single processor thread’s queue.
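A minimal sketch of partitioning by key, assuming each log message carries its job id and using an in-process dict in place of the database. The worker count and the crc32-based hash are illustrative choices, not necessarily what our log processor uses:

```python
import queue
import threading
import zlib
from collections import defaultdict

NUM_WORKERS = 4  # illustrative; the post mentions running nine threads


def partition(job_id, num_workers=NUM_WORKERS):
    """Map a job to a fixed worker, so all of its messages share one FIFO queue."""
    return zlib.crc32(str(job_id).encode()) % num_workers


def process_logs(messages):
    """Process (job_id, text) pairs in parallel, keeping per-job order.

    Returns {job_id: [text, ...]}; a dict stands in for the database.
    """
    queues = [queue.Queue() for _ in range(NUM_WORKERS)]
    logs = defaultdict(list)
    lock = threading.Lock()  # guards the shared dict across threads

    def worker(q):
        while True:
            item = q.get()
            if item is None:  # sentinel: no more messages for this worker
                return
            job_id, text = item
            with lock:  # stand-in for the database update
                logs[job_id].append(text)

    threads = [threading.Thread(target=worker, args=(q,)) for q in queues]
    for t in threads:
        t.start()
    for job_id, text in messages:  # dispatch in arrival order
        queues[partition(job_id)].put((job_id, text))
    for q in queues:
        q.put(None)
    for t in threads:
        t.join()
    return dict(logs)


incoming = [(1, "a1"), (2, "b1"), (1, "a2"), (3, "c1"), (2, "b2"), (1, "a3")]
result = process_logs(incoming)
print(result[1])  # ['a1', 'a2', 'a3']
```

Because every message of a job lands on the same FIFO queue, ordering within a job is preserved while different jobs proceed in parallel. The flip side is limitation b): a job with a lot of output keeps one queue busy no matter how many other threads are idle.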

We weren’t sure whether this would affect our database load at all, but luckily, even with nine threads, there was no noteworthy increase in database response time. Thanks to our graphs in Librato Metrics, we could keep a close eye on any variance in the mean and 95th percentile.

The Future

We’ve been thinking a lot about how we can improve log processing in general. Currently, the entire log is stored in one database field that is constantly being updated. The downside is that, in the worst case, every update has to read the column before it can write it again.

To avoid that, we’ll be splitting logs into chunks and storing only those chunks. Every message gets a timestamp and a chunk identifier based on its position in the log. Based on that information, we can reassemble the log from all of its chunks to display it in the user interface while the build is running.

With timestamps and chunk identifiers, we can ensure in all parts of the app that the order in which logs are handled corresponds to the order of the log output on our build systems.

When the build is done, we can assemble all chunks and archive the log on external storage like S3.
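A minimal sketch of the chunked approach, assuming an in-memory list in place of the database; the class and field names are hypothetical. Chunks are only ever inserted, never read back to append to, and reassembly sorts by chunk number, so chunks that arrive out of order still produce the right log:

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LogChunk:
    job_id: int
    number: int       # chunk identifier, based on the position in the log
    timestamp: float  # when the output was produced (not needed for reassembly here)
    content: str


@dataclass
class ChunkStore:
    """Insert-only storage: each chunk is written once and never rewritten."""
    chunks: list = field(default_factory=list)

    def add(self, chunk):
        self.chunks.append(chunk)

    def assemble(self, job_id):
        """Rebuild one job's log in position order, whatever the arrival order."""
        ours = sorted((c for c in self.chunks if c.job_id == job_id),
                      key=lambda c: c.number)
        return "".join(c.content for c in ours)


store = ChunkStore()
store.add(LogChunk(job_id=7, number=2, timestamp=1.2, content="world\n"))
store.add(LogChunk(job_id=7, number=1, timestamp=1.1, content="hello "))
print(store.assemble(7), end="")  # hello world
```

The same assemble step can serve both the live view while a build runs and the final text to archive once the build is done.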

Again, our apologies for the log unavailability. We’re taking things step by step to make sure it doesn’t happen again!

Just short of four months ago, we announced the availability of pull request testing on Travis CI. Just recently, we announced that pull request testing is available to everyone and enabled for all of their projects by default.

Since then, travisbot has been busy, very busy, leaving comments on your pull requests and helping you make a fair judgement of whether a pull request is good to merge. We have built more than 17000 pull requests since we launched this feature. We salute you, travisbot, for never letting us down!

Today, largely thanks to the fine folks over at GitHub, pull requests are getting even more awesome. Check out the full story on the GitHub blog.

Instead of relying on travisbot’s comments to notify you of the build status, pull requests now have first-class build status support.

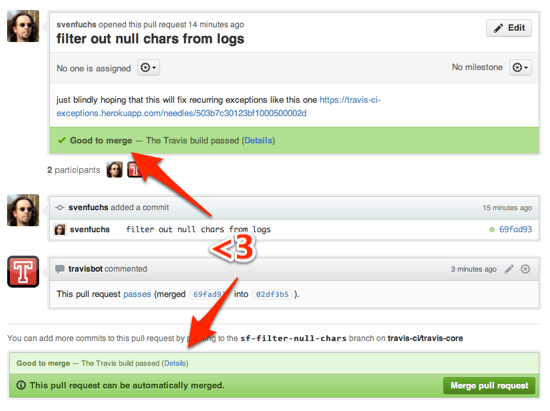

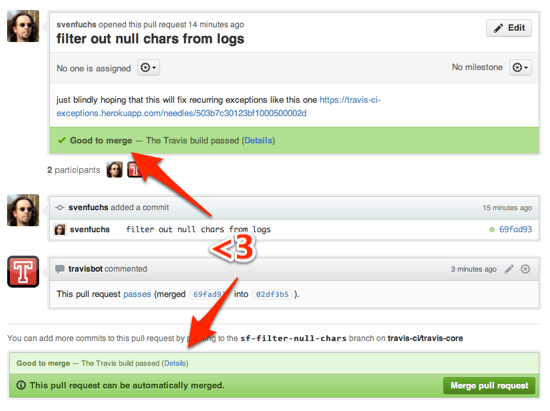

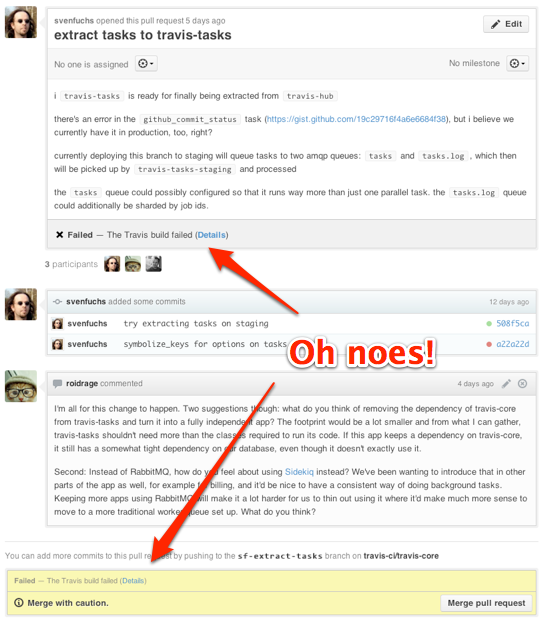

What does that mean? A picture says more than a thousand words. Here’s what every pull request looks like when it has been built successfully on Travis CI. All green, good to merge!

It is just as awesome as it looks, but you should try it for yourself right away!

When a new pull request comes in, we start testing it right away and mark the build as pending. You don’t even have to reload the pull request page; you’ll see the changes happen as if done by the magic robot hands of travisbot himself!

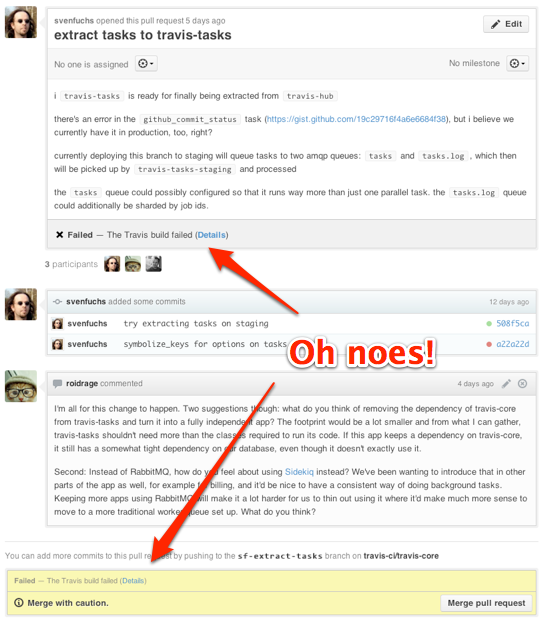

Should a pull request fail the build, as unlikely as that may seem, you’ll see a warning about merging it. The same is true for a pending build: both are marked as unstable. You can still merge, but you do so at your own risk. After all, isn’t it nicer to just wait patiently for that beautiful green to come up? We thought so!

In all three scenarios, there’s a handy link that takes you to the build’s page on Travis CI, so you can follow the test log in awe while you wait for the build to finish. Just click on “Details” and you’re golden!

There is a neat feature attached to this. The build status is sneakily attached not to the pull request itself, but to the commits included in it. As a pull request gets more updates over time, we keep updating the corresponding commits, building up a history of failed and successful commits. This is particularly handy for teams who iterate on pull requests before they ship features.
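This post doesn’t describe how Travis CI talks to GitHub. As a hedged sketch: GitHub’s commit status API attaches a state (pending, success, failure or error) to a commit SHA via POST /repos/{owner}/{repo}/statuses/{sha}, and because statuses live on commits rather than on the pull request, each new push gets its own status. The owner, repo, SHA, token and the helper name below are placeholders, and the request is only built, not sent:

```python
import json
import urllib.request

GITHUB_API = "https://api.github.com"
VALID_STATES = {"pending", "success", "failure", "error"}


def commit_status_request(owner, repo, sha, state, target_url, description, token):
    """Build (without sending) a POST that sets the build status of one commit."""
    if state not in VALID_STATES:
        raise ValueError(f"unsupported state: {state!r}")
    payload = {
        "state": state,
        "target_url": target_url,    # shown as the "Details" link
        "description": description,
    }
    return urllib.request.Request(
        f"{GITHUB_API}/repos/{owner}/{repo}/statuses/{sha}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"token {token}",
                 "Content-Type": "application/json"},
        method="POST",
    )


# Placeholder values; a real client would send this with urllib.request.urlopen(req).
req = commit_status_request(
    "octocat", "hello-world", "0123abcd", "pending",
    "https://example.com/builds/1", "The build is running", "YOUR_TOKEN",
)
print(req.get_method(), req.full_url)
# POST https://api.github.com/repos/octocat/hello-world/statuses/0123abcd
```

A CI service would typically send pending when a build starts and success or failure when it finishes, once per commit it built.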

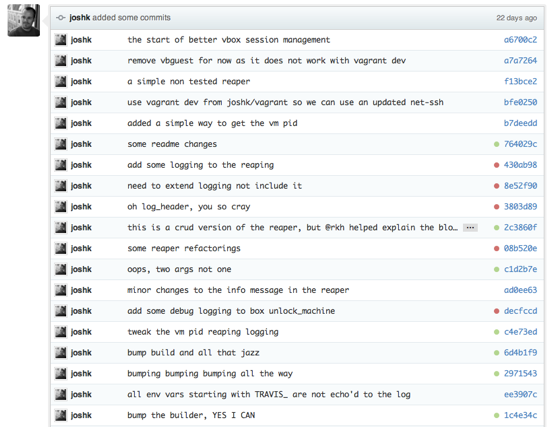

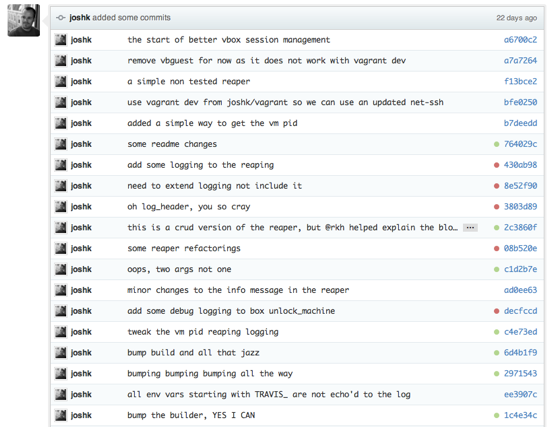

Let’s have a look at what Josh has been up to in this pull request. Notice the little bubbles next to each commit reference.

Want to see what it looks like for real? No worries: here’s a pull request on the rspec project, here’s one from Mongoid, and here’s one from the Zend Framework project.

The great news is that this feature has been active on Travis CI for a while now, so hundreds of existing pull requests will immediately have a build status attached and displayed in the user interface. This is true for open source projects on Travis CI and for private projects on Travis Pro.

Due to an issue we failed to notice early on, pull requests opened around Thursday and Friday of last week weren’t properly updated on Travis CI at the time. If they have received new commits or other updates since then, that should be fixed by now. We apologize for the slightly reduced show effect of this new hotness.

Now, the bad news: this means travisbot is going to retire from commenting on your pull requests soon. You all learned to love him just as much as we do, and he might make a comeback at some point in the future. Until then, he’ll be hanging out in our Campfire room, enjoying a little less chatter around him.

Note that this build status awesomeness only works if a user with administrative rights for the repository in question is set up on Travis CI. Unfortunately, we can’t update the status without admin rights. If you set up a repository on which you don’t have admin rights, you need to find someone who does and have them log in to Travis CI; we’ll sync the permissions automatically and use their credentials.

Thank you, GitHub!

An important part of Travis CI is our CI environment: all the runtimes, tools, libraries and system configuration that projects rely on to run their test suites. While it is considered the most mature part of Travis CI (we are at v5.1 at the moment), it still moves fast. Today we want to give you a heads-up on important recent and upcoming changes:

- CI username change

- Disabling some services (e.g. MongoDB, Riak, RabbitMQ) on boot

- Migration to Ubuntu 12.04

- Migration to 64 bit VMs

CI Username Change

On August 25th, we deployed new VM images that change the CI username from vagrant to travis. If your project depends on

- the exact system username, or

- $HOME pointing to /home/vagrant

then you need to update your .travis.yml and/or build scripts to use the USER and HOME environment variables instead. Depending on the exact values of those variables is usually not necessary: the best way to detect that you are running in the Travis CI environment is to check whether the CI or TRAVIS environment variable (or both) is set. If you feel adventurous, feel free to use HAS_JOSH_K_SEAL_OF_APPROVAL instead (Josh K is a real person).
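In a Python build or test helper, that advice might look like the following minimal sketch. The function names and the .cache/myproject subdirectory are hypothetical; the only things taken from above are the CI, TRAVIS, USER and HOME variables:

```python
import os


def running_on_ci(env=None):
    """True if either the CI or the TRAVIS environment variable is set."""
    env = os.environ if env is None else env
    return "CI" in env or "TRAVIS" in env


def ci_user(env=None):
    """The current username from USER, instead of assuming vagrant or travis."""
    env = os.environ if env is None else env
    return env.get("USER")


def cache_dir(env=None):
    """A path under HOME instead of a hard-coded /home/vagrant."""
    env = os.environ if env is None else env
    return os.path.join(env["HOME"], ".cache", "myproject")  # hypothetical subdirectory


fake_env = {"CI": "true", "TRAVIS": "true", "USER": "travis", "HOME": "/home/travis"}
print(running_on_ci(fake_env), ci_user(fake_env), cache_dir(fake_env))
# True travis /home/travis/.cache/myproject (on Linux)
```

Passing the environment in explicitly keeps the helpers easy to test; in a real build, calling them without arguments reads os.environ.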

Disabling Most Services on Boot

Currently, when we boot our VMs, a number of services are started:

- MySQL

- PostgreSQL

- RabbitMQ

- MongoDB

- Redis

- Riak

- CouchDB

and so on. Each one individually may consume only a small amount of resources, but together they consume a non-trivial amount of RAM. This limits both the amount of RAM available to your test suites and our ability to move some parts of the environment (for example, MySQL and PostgreSQL data directories) to RAM-based file system mounts, which would speed up test suites that are very heavy on I/O, in particular random-access I/O (think Ruby on Rails or Django).

Tuning the configuration of services to consume less RAM is possible, but it is very hard to pick good defaults for all of them.

In addition, most projects and test suites don’t use these services. Because of this, we will be turning off most services on boot, leaving only MySQL and PostgreSQL running. Note that we already do this for some services (for example, Cassandra, Neo4J and ElasticSearch).

If your project needs, say, MongoDB running, you can add `services: mongodb` to your .travis.yml, or, if you need several services, list them like this:

```yaml
services:
  - riak      # will start riak
  - rabbitmq  # will start rabbitmq-server
  - memcache  # will start memcached
```

This allows us to provide nice aliases for each service and normalize any differences between names (RabbitMQ, for example, is started as rabbitmq-server). Note that this feature only works for services we provision in our CI environment. If you download, say, Apache Jackrabbit and start it manually in a before_install step, you will still have to do it that way.
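A minimal sketch of how such aliasing could work, assuming the .travis.yml has already been parsed into a dict and that the services key may hold either a single name or a list (an assumption about the accepted forms). The alias table holds only the three mappings from the YAML comments above, and the function name is hypothetical:

```python
# Only the aliases shown in the example above; the real table is larger.
SERVICE_ALIASES = {
    "riak": "riak",
    "rabbitmq": "rabbitmq-server",
    "memcache": "memcached",
}


def services_to_start(config):
    """Map the `services` entries of a parsed .travis.yml to service names.

    Unknown names are rejected: only services provisioned in the CI
    environment can be started this way; anything else has to be
    started manually, e.g. in before_install.
    """
    requested = config.get("services", [])
    if isinstance(requested, str):  # a single service given as a plain string
        requested = [requested]
    unknown = [name for name in requested if name not in SERVICE_ALIASES]
    if unknown:
        raise ValueError(f"not provisioned, start manually: {unknown}")
    return [SERVICE_ALIASES[name] for name in requested]


print(services_to_start({"services": ["riak", "rabbitmq", "memcache"]}))
# ['riak', 'rabbitmq-server', 'memcached']
```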

The change will go into effect on September 14th, 2012. We encourage all Travis CI users to update their .travis.yml as soon as possible, both to avoid issues and to stay forward-compatible.

Distribution Versions: A Brief History Lesson

When we first started using virtual machines for Travis CI (around June 2011), we decided to use Ubuntu 10.04. This worked perfectly, but by the fall of 2011, 10.04 had started showing its age. Our users kept asking for more recent versions of certain tools and libraries, which were challenging to provide without building and maintaining a myriad of Debian packages. So in November 2011, we migrated all VMs to Ubuntu 11.04, which solved the problem. Then, in early April 2012, we migrated to 11.10.

Now it is August 2012, and the time to move on to 12.04 is drawing close. We want to briefly explain how Travis CI will migrate to it, why we are doing it and what may change for your project.

Why Migrate?

With Ubuntu 12.04, we will be able to provide more up-to-date versions of tools and services in our CI environment, including:

- MySQL 5.5

- CouchDB 1.2

- Updated Git

and many others. In addition, we hope to be able to provision Python 3.3 preview releases (there are 12.04 packages we can use).

Staying One Step Behind, Intentionally

Our users are mostly software developers, and they tend to like staying up to date with tools, services, libraries and so on. However, production environments are rarely on the bleeding edge. So for CI in general, and Travis CI in particular, it is important to maintain a balance: not too old, but not too new either. This is why Travis CI intentionally stays several months behind Ubuntu releases. It gives developers several months to catch up with recent changes, fix issues and push out new releases.

Notable Changes in 12.04

12.04 is a significantly smaller change than 11.10 was: no breaking changes to fundamental libraries like OpenSSL, no major or minor GCC version changes, et cetera.

MySQL Server

12.04 provides MySQL Server 5.5. Most projects should keep working without any changes.

System Perl

The system Perl version changes to 5.14. This won’t matter for Perl projects on Travis CI (we use a separate set of Perls provisioned with Perlbrew), but projects in other languages that use Perl as part of their build system may be affected.

System Erlang/OTP

The system Erlang/OTP version changes to R14B04. This won’t matter for Erlang projects on Travis CI (we use a separate set of OTP builds provisioned with kerl), but projects in other languages that rely on Erlang as part of their build system may be affected.

Bison 2.5

Projects that use Bison may need to check for 2.5 compatibility.
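A build script can check which Bison it is running against before regenerating parsers. A minimal sketch, assuming the first line of bison --version contains the version number (GNU Bison’s banner reads like bison (GNU Bison) 2.5); the function names and the printed message are hypothetical:

```python
import re
import shutil
import subprocess


def parse_version(banner):
    """Extract the first dotted version number, e.g. 'bison (GNU Bison) 2.5' -> (2, 5)."""
    match = re.search(r"(\d+)\.(\d+)(?:\.(\d+))?", banner)
    if match is None:
        raise ValueError(f"no version number in {banner!r}")
    return tuple(int(part) for part in match.groups() if part is not None)


def installed_bison_version():
    """Return bison's version as a tuple, or None if bison is not on PATH."""
    if shutil.which("bison") is None:
        return None
    out = subprocess.run(["bison", "--version"], capture_output=True, text=True, check=True)
    return parse_version(out.stdout.splitlines()[0])


version = installed_bison_version()
if version is not None and version >= (2, 5):
    print("bison", ".".join(map(str, version)), "found; run the 2.5 compatibility checks here")
```

Comparing tuples rather than strings avoids surprises such as "2.10" sorting before "2.5".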

The Road To 12.04

The Travis CI environment will transition to 12.04 in the first week of September 2012.

Migrating CI Environment to 64 bit

The current Travis CI environment is 32 bit. This works fine for most cases but has a few downsides:

- The majority of developers and projects target 64 bit first because this is their deployment environment of choice.

- Some runtimes are primarily used in 64 bit environments and their 32 bit counterparts have stability issues that are outside of our control.

Because we are already working towards freeing more RAM for project test suites, we decided it is a good time to move to 64 bit as well.

The exact migration date is not yet decided, but most likely this will happen in late September or early October 2012.
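Projects that need to behave differently on 32 and 64 bit (for example, skipping tests that rely on a large address space) can check at runtime instead of assuming either. A small Python sketch with hypothetical function names; note that pointer_bits reports the interpreter’s own pointer width, which could still be 32 on a 64 bit VM if a 32 bit runtime is installed:

```python
import platform
import struct


def pointer_bits():
    """Width of a C pointer in the running interpreter: 32 or 64."""
    return struct.calcsize("P") * 8


def machine():
    """Hardware name reported by the OS, e.g. 'x86_64' or 'i686' on Linux."""
    return platform.machine()


if pointer_bits() == 32:
    print("32 bit runtime on", machine(), "- skipping large-memory tests")
else:
    print("64 bit runtime on", machine())
```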

Getting Help

If you have questions, please ask them on our mailing list or in #travis on chat.freenode.net.

Happy testing!

The Travis CI Team